Effect size and power

Session 9

MATH 80667A: Experimental Design and Statistical Methods

HEC Montréal

Outline

Effect sizes

Power

Effect size

Motivating example

Quote from the OSC psychology replication

The key statistics provided in the paper to test the “depletion” hypothesis is the main effect of a one-way ANOVA with three experimental conditions and confirmatory information processing as the dependent variable; $F(2,82)=4.05$, $p=0.02$, $\eta^2=0.09$. Considering the original effect size and an alpha of 0.05 the sample size needed to achieve 90% power is 132 subjects.

Replication report of Fischer, Greitemeyer, and Frey (2008, JPSP, Study 2) by E.M. Galliani

Translating statement into science

Q: How many observations should I gather to reliably detect an effect?

Q: How big is this effect?

Does it matter?

With a large enough sample size, a difference of any size between treatments becomes statistically significant.

Statistical significance ≠ practical relevance

But whether this is important depends on the scientific question.

Example

- What is the minimum difference between two treatments that would be large enough to justify commercialization of a drug?

- Tradeoff between efficacy of new treatment vs status quo, cost of drug, etc.

Using statistics to measure effects

Test statistics and p-values are not good summaries of the magnitude of an effect:

- for a fixed nonzero effect, the larger the sample size, the bigger the statistic and the smaller the p-value
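
To make the bullet above concrete, here is a minimal R sketch. It assumes two groups of equal size n and an observed standardized difference fixed at d = 0.2 (both chosen purely for illustration). In that setting the pooled two-sample t statistic equals d times the square root of n/2, so the statistic grows with n even though the effect size does not change.

```r
# Illustration only: fixed standardized difference, growing sample size.
d <- 0.2                       # hypothetical standardized mean difference
n <- c(20, 200, 2000)          # hypothetical per-group sample sizes
tstat <- d * sqrt(n / 2)       # two-sample t statistic with pooled variance
# Two-sided p-value from the Student-t distribution with 2n - 2 degrees of freedom
pval <- 2 * pt(abs(tstat), df = 2 * n - 2, lower.tail = FALSE)
data.frame(n = n, d = d, tstat = round(tstat, 2), pval = signif(pval, 2))
```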

Instead use

standardized differences

percentage of variability explained

Estimators popularized in the handbook

Cohen, Jacob. Statistical Power Analysis for the Behavioral Sciences, 2nd ed., Routledge, 1988.

Illustrating effect size (differences)

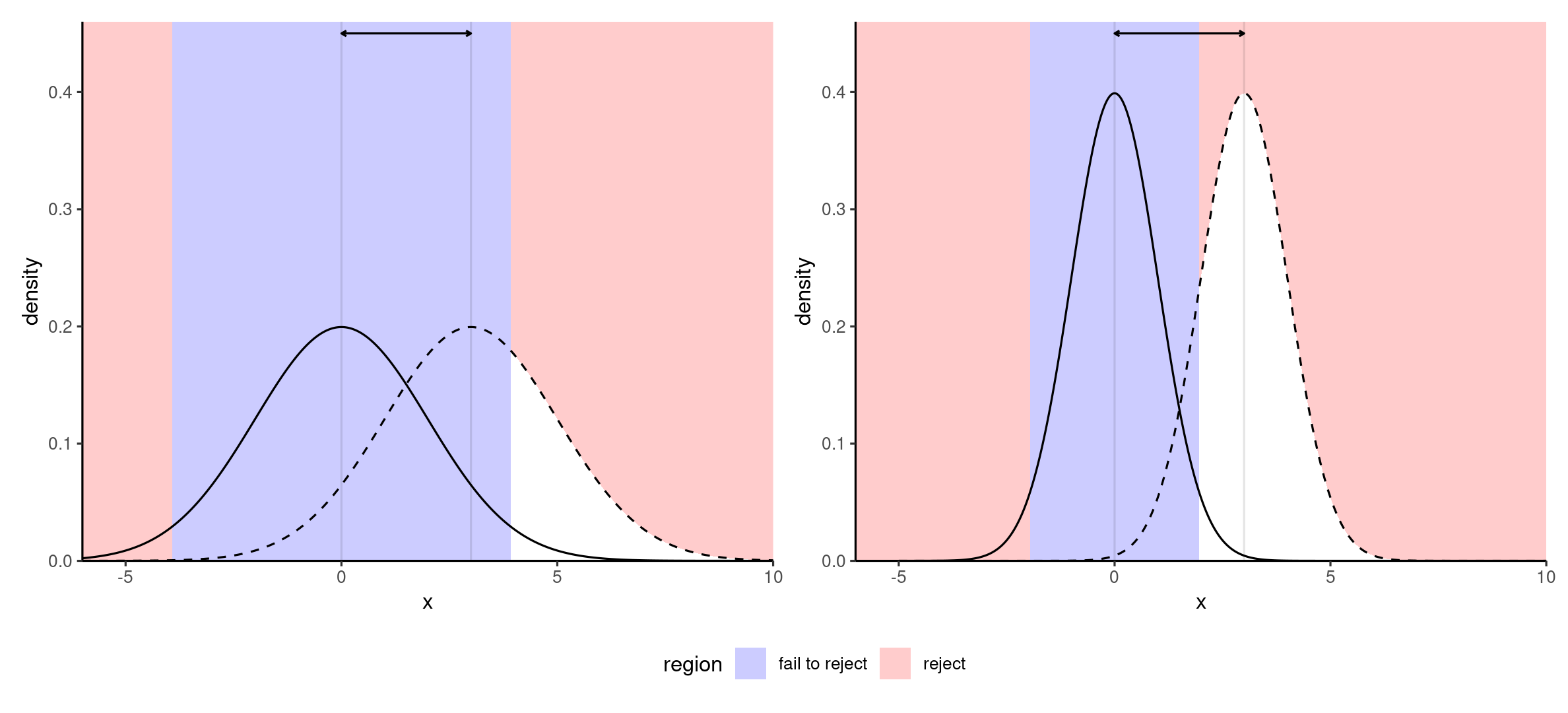

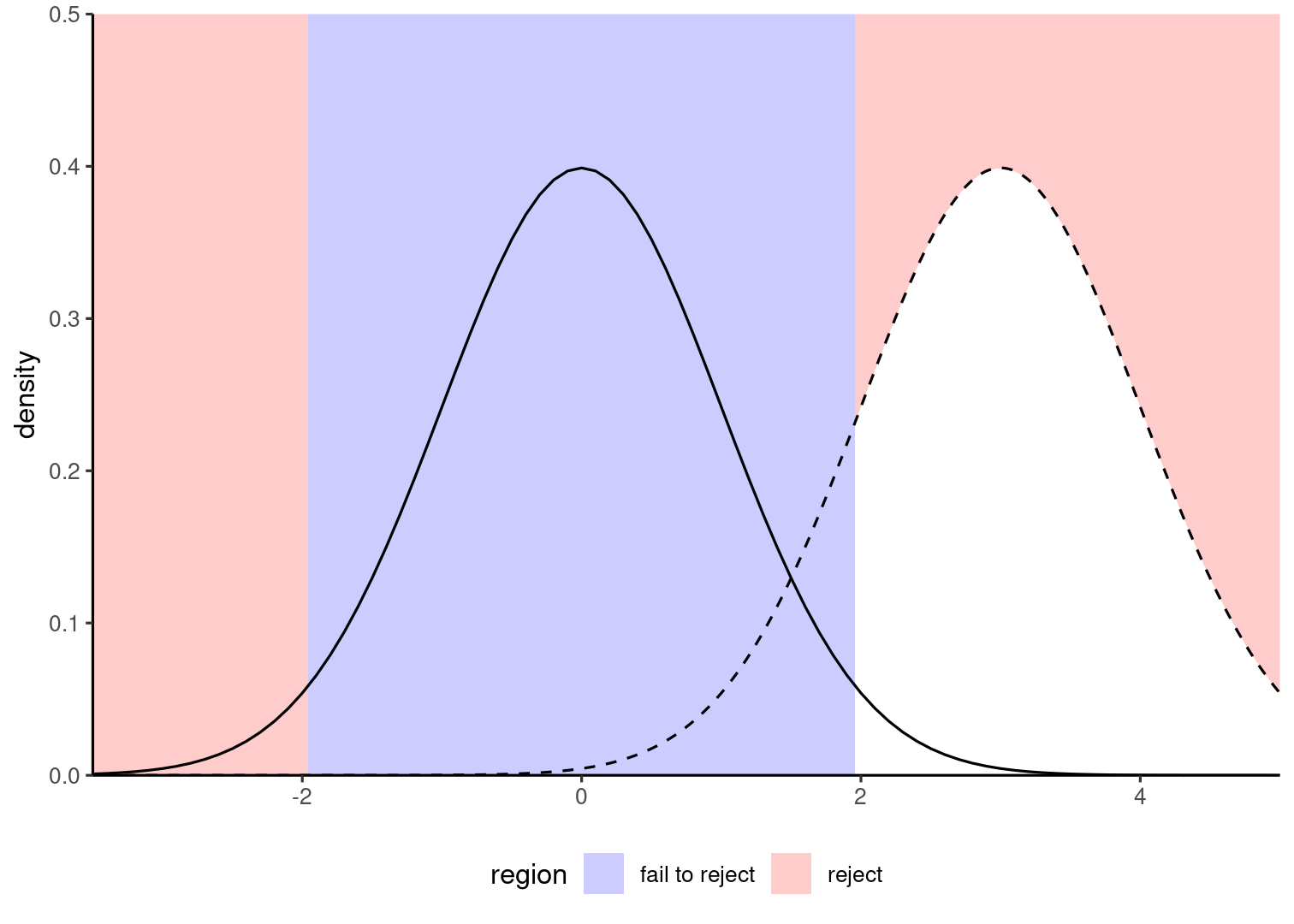

The plot shows null (thick) and true sampling distributions (dashed) for the same difference in sample mean with small (left) and large (right) samples.

Estimands, estimators, estimates

- $\mu_i$ is the (unknown) population mean of group $i$ (parameter, or estimand)

- $\widehat{\mu}_i$ is a formula (an estimator) that takes data as input and returns a numerical value (an estimate).

- throughout, use hats to denote estimated quantities:

Left to right: parameter $\mu$ (target), estimator $\widehat{\mu}$ (recipe) and estimate $\widehat{\mu}=10$ (numerical value, proxy)

From Twitter, @simongrund89

Cohen's d

Standardized measure of effect (dimensionless=no units):

Assuming equal variance $\sigma^2$, compare the means of two groups $i$ and $j$:

$$d = \frac{\mu_i - \mu_j}{\sigma}$$

- The usual estimator of Cohen's $d$, $\widehat{d} = (\widehat{\mu}_i - \widehat{\mu}_j)/\widehat{\sigma}$, uses the sample averages of the groups and the square root of the pooled variance.

Cohen's classification: small ($d=0.2$), medium ($d=0.5$) or large ($d=0.8$) effect size.

Note: this is not the t-statistic (the denominator is the estimated standard deviation, not the standard error of the mean).

Note that there are multiple versions of Cohen's coefficients. The thresholds given here are those used by the pwr package. The small/medium/large cutoffs vary depending on the test! See the vignette of pwr for defaults.
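
As a minimal sketch of the estimator described above, the following R code computes the plug-in estimate of Cohen's d with the pooled standard deviation and contrasts it with the t statistic. The helper name `cohens_d_hat` and the toy data are made up for illustration; they do not come from pwr or effectsize.

```r
# Plug-in estimator of Cohen's d using the pooled standard deviation.
# `cohens_d_hat` is a hypothetical helper, not a function from pwr or effectsize.
cohens_d_hat <- function(x, y) {
  nx <- length(x)
  ny <- length(y)
  # Pooled variance: weighted average of the two sample variances
  pooled_var <- ((nx - 1) * var(x) + (ny - 1) * var(y)) / (nx + ny - 2)
  (mean(x) - mean(y)) / sqrt(pooled_var)
}

# Toy data, made up for illustration
x <- c(5.1, 4.8, 6.0, 5.5, 5.2)
y <- c(4.2, 4.9, 4.4, 5.0, 4.1)
d_hat <- cohens_d_hat(x, y)
# The t statistic divides by the standard error of the difference instead,
# so t = d_hat * sqrt(n / 2) when both groups have n observations
t_stat <- unname(t.test(x, y, var.equal = TRUE)$statistic)
c(d_hat = d_hat, t_stat = t_stat)
```

Unlike the t statistic, the estimate of d does not grow systematically with the sample size.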

Effect size: ratio of variance

For a one-way ANOVA (equal variance $\sigma^2$) with more than two groups, Cohen's $f$ is the square root of

$$f^2 = \frac{1}{\sigma^2}\sum_{j=1}^k \frac{n_j}{n}(\mu_j-\mu)^2,$$

a weighted sum of squared differences relative to the overall mean $\mu$.

For $k=2$ groups, Cohen's $f$ and Cohen's $d$ are related via $f=d/2$.
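
The definition can be checked numerically. The sketch below uses a hypothetical helper `cohens_f` and made-up means to compute f from group means, a common standard deviation and group sizes. It then verifies the relation with Cohen's d for two groups of equal size, the balanced case in which f = d/2 holds exactly.

```r
# Cohen's f from group means, common standard deviation and group sizes.
# `cohens_f` is a hypothetical helper written for this illustration.
cohens_f <- function(mu, sigma, n) {
  w <- n / sum(n)                 # weights n_j / n
  mu_bar <- sum(w * mu)           # overall (weighted) mean
  sqrt(sum(w * (mu - mu_bar)^2)) / sigma
}

# Two balanced groups with made-up means: f should equal d / 2
mu <- c(10, 12)
sigma <- 4
d <- (mu[2] - mu[1]) / sigma
c(f = cohens_f(mu, sigma, n = c(30, 30)), half_d = d / 2)
```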

Effect size: proportion of variance

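If there is a single experimental factor $A$, we break down the variability as $\sigma^2_{\text{total}} = \sigma^2_{\text{resid}} + \sigma^2_A$ and define the percentage of variability explained by the effect of $A$ as

$$\eta^2 = \frac{\text{explained variability}}{\text{total variability}} = \frac{\sigma^2_A}{\sigma^2_{\text{total}}}.$$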

Coefficient of determination estimator

For the balanced one-way between-subject ANOVA, the typical estimator is the coefficient of determination

$$\widehat{\eta}^2 \equiv \widehat{R}^2 = \frac{F\nu_1}{F\nu_1 + \nu_2},$$

where $\nu_1 = K-1$ and $\nu_2 = n-K$ are the degrees of freedom for the one-way ANOVA with $n$ observations and $K$ groups.

- The coefficient of determination $\widehat{R}^2$ is an upward biased estimator (too large on average).

- for the replication, $\widehat{R}^2 = (4.05 \times 2)/(4.05 \times 2 + 82) = 0.09$.

People frequently write $\eta^2$ when they mean the estimator $\widehat{R}^2$
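
The calculation for the replication can be reproduced with a one-line function. This is a small sketch; the helper name `r2_from_f` is hypothetical.

```r
# Coefficient of determination recovered from the F statistic and its degrees of freedom.
# `r2_from_f` is a hypothetical helper name.
r2_from_f <- function(Fstat, nu1, nu2) {
  Fstat * nu1 / (Fstat * nu1 + nu2)
}
# Replication report: F(2, 82) = 4.05
round(r2_from_f(4.05, nu1 = 2, nu2 = 82), 2)
# 0.09
```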

$\omega^2$ estimator

Another estimator of $\eta^2$ that is recommended in Keppel & Wickens (2004) for power calculations is $\widehat{\omega}^2$.

For one-way between-subject ANOVA, the latter is obtained from the F-statistic as

$$\widehat{\omega}^2 = \frac{\nu_1(F-1)}{\nu_1(F-1)+n}$$

- for the replication, $n = \nu_1 + \nu_2 + 1 = 85$ and $\widehat{\omega}^2 = (2 \times 3.05)/(2 \times 3.05 + 85) = 0.067$.

- if the value returned is negative, report zero.

Since the F statistic is approximately 1 on average under the null hypothesis, subtracting 1 from $F$ removes this baseline.

Link between $\eta^2$ and Cohen's $f$

Software usually takes Cohen's $f$ (or $f^2$) as input for the effect size.

Convert from $\eta^2$ (proportion of variance) to $f$ (ratio of variance) via the relationship

$$f^2 = \frac{\eta^2}{1-\eta^2}.$$

Calculating Cohen's f

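Replace $\eta^2$ by $\widehat{R}^2$ or $\widehat{\omega}^2$ to get

$$\widehat{f} = \sqrt{\frac{F\nu_1}{\nu_2}}, \qquad \tilde{f} = \sqrt{\frac{\nu_1(F-1)}{n}}$$

If we plug in estimated values

- with $\widehat{R}^2$, we get $\widehat{f}=0.314$

- with $\widehat{\omega}^2$, we get $\tilde{f}=0.27$.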
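
Both conversions can be sketched in a few lines of R, starting from the reported F statistic and its degrees of freedom. The helper names are hypothetical, and the overall sample size is recovered as n = ν1 + ν2 + 1, which holds for a one-way design.

```r
# Cohen's f from the reported F statistic, via the two estimators of eta-squared.
# Helper names are hypothetical; n = nu1 + nu2 + 1 holds for a one-way design.
f_from_r2 <- function(Fstat, nu1, nu2) {
  sqrt(Fstat * nu1 / nu2)
}
f_from_omega2 <- function(Fstat, nu1, nu2) {
  n <- nu1 + nu2 + 1
  # Truncate at zero: negative omega-squared estimates are reported as zero
  sqrt(max(0, nu1 * (Fstat - 1)) / n)
}
# Replication report: F(2, 82) = 4.05
f_hat <- f_from_r2(4.05, nu1 = 2, nu2 = 82)
f_tilde <- f_from_omega2(4.05, nu1 = 2, nu2 = 82)
c(f_hat = f_hat, f_tilde = f_tilde)
```

The estimate based on ω² is the smaller of the two, which translates into a larger required sample size in a power calculation.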

Effect sizes for multiway ANOVA

With a completely randomized design with only experimental factors, use the partial effect size $\eta^2_{\langle\text{effect}\rangle} = \sigma^2_{\text{effect}}/(\sigma^2_{\text{effect}} + \sigma^2_{\text{resid}})$

In R, use effectsize::omega_squared(model, partial = TRUE).

Partial effects and variance decomposition

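Consider a completely randomized balanced design with two factors $A$, $B$ and their interaction $AB$. In a balanced design, we can decompose the total variance as

$$\sigma^2_{\text{total}} = \sigma^2_A + \sigma^2_B + \sigma^2_{AB} + \sigma^2_{\text{resid}}.$$

Cohen's partial $f^2$ measures the variability explained by a main effect or an interaction relative to the residual variability, e.g.,

$$f^2_{\langle A\rangle} = \frac{\sigma^2_A}{\sigma^2_{\text{resid}}}, \qquad f^2_{\langle AB\rangle} = \frac{\sigma^2_{AB}}{\sigma^2_{\text{resid}}}.$$

These variance quantities are unknown, so they need to be estimated.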

Partial effect size (variance)

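Effect sizes are often reported in terms of variability via the ratio

$$\eta^2_{\langle\text{effect}\rangle} = \frac{\sigma^2_{\text{effect}}}{\sigma^2_{\text{effect}} + \sigma^2_{\text{resid}}}.$$

- Both $\widehat{\eta}^2_{\langle\text{effect}\rangle}$ (aka $\widehat{R}^2_{\langle\text{effect}\rangle}$) and $\widehat{\omega}^2_{\langle\text{effect}\rangle}$ are estimators of this quantity and are obtained from the F statistic and degrees of freedom of the effect.

$\widehat{\omega}^2_{\langle\text{effect}\rangle}$ is presumed less biased than $\widehat{\eta}^2_{\langle\text{effect}\rangle}$, as is $\widehat{\epsilon}^2_{\langle\text{effect}\rangle}$.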

Estimation of partial ω2

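The formulas are similar to the one-way case for between-subject experiments, with

$$\widehat{\omega}^2_{\langle\text{effect}\rangle} = \frac{\mathrm{df}_{\text{effect}}(F_{\text{effect}}-1)}{\mathrm{df}_{\text{effect}}(F_{\text{effect}}-1)+n},$$

where $n$ is the overall sample size.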

In R, effectsize::omega_squared reports these estimates with one-sided confidence intervals.

Reference for confidence intervals: Steiger (2004), Psychological Methods

The confidence intervals are based on the F distribution, by changing the non-centrality parameter and inverting the distribution function (pivot method). There is a one-to-one correspondence with Cohen's f, and a bijection between the latter and omega_sq_partial or eta_sq_partial. This yields asymmetric intervals.

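Converting $\omega^2$ to Cohen's $f$

Given an estimate of $\eta^2_{\langle\text{effect}\rangle}$, convert it into an estimate of Cohen's partial $f^2_{\langle\text{effect}\rangle}$, e.g.,

$$\widehat{f}^2_{\langle\text{effect}\rangle} = \frac{\widehat{\omega}^2_{\langle\text{effect}\rangle}}{1-\widehat{\omega}^2_{\langle\text{effect}\rangle}}.$$

The function effectsize::cohens_f returns $\tilde{f}^2 = n^{-1} F_{\text{effect}}\,\mathrm{df}_{\text{effect}}$, a transformation of $\widehat{\eta}^2_{\langle\text{effect}\rangle}$.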
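
The formulas for partial ω² and its conversion to Cohen's f only need the F statistics and degrees of freedom from the ANOVA table. The sketch below applies them by hand, in base R, to the built-in `warpbreaks` data (a balanced 2 × 3 design). The dataset is used only as a convenient example and is not part of the course material.

```r
# Partial omega-squared and Cohen's f recovered from an ANOVA table.
# warpbreaks (datasets package) is a balanced 2 x 3 design, used here only as an example.
model <- lm(breaks ~ wool * tension, data = warpbreaks)
tab <- anova(model)
n <- nrow(warpbreaks)                         # overall sample size
effects <- rownames(tab) != "Residuals"       # rows for main effects and interaction
Fstat <- tab[effects, "F value"]
df_effect <- tab[effects, "Df"]
# Partial omega-squared; negative estimates are reported as zero
omega2_partial <- pmax(0, df_effect * (Fstat - 1) / (df_effect * (Fstat - 1) + n))
# Convert to Cohen's partial f
f_partial <- sqrt(omega2_partial / (1 - omega2_partial))
data.frame(effect = rownames(tab)[effects], df = df_effect,
           F = round(Fstat, 2), omega2 = round(omega2_partial, 3),
           f = round(f_partial, 3))
```

If the effectsize package is installed, the point estimates can be compared with the output of effectsize::omega_squared(model, partial = TRUE).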

Summary

- Effect sizes can be recovered using information found in the ANOVA table.

- Multiple estimators for the same quantity

  - report the one used along with confidence or tolerance intervals.

  - some estimators are preferred (less biased): this matters for power studies

- The correct measure may depend on the design

  - partial vs total effects,

  - different formulas for within-subjects (repeated measures) designs!

Power

Power and sample size calculations

Journals and grant agencies oftentimes require an estimate of the sample size needed for a study.

- large enough to pick-up effects of scientific interest (good signal-to-noise)

- efficient allocation of resources (don't waste time/money)

Same for replication studies: how many participants needed?

I cried power!

- Power is the ability to detect when the null is false, for a given alternative

- It is the probability of correctly rejecting the null hypothesis under an alternative.

- The larger the power, the better.

Living in an alternative world

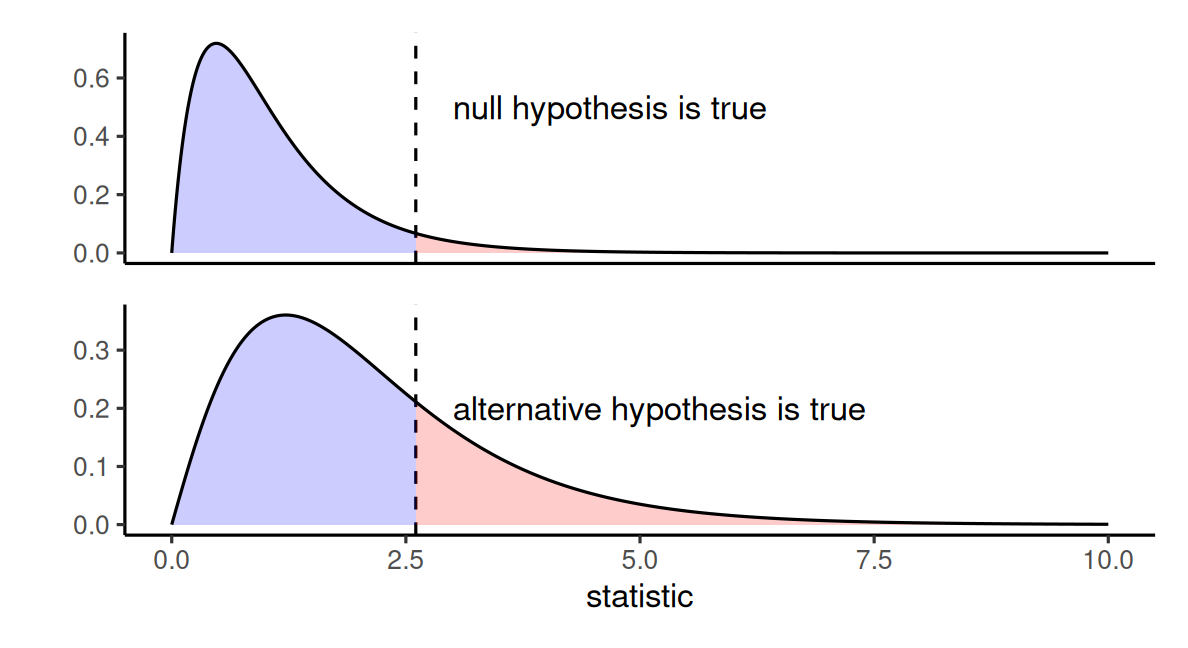

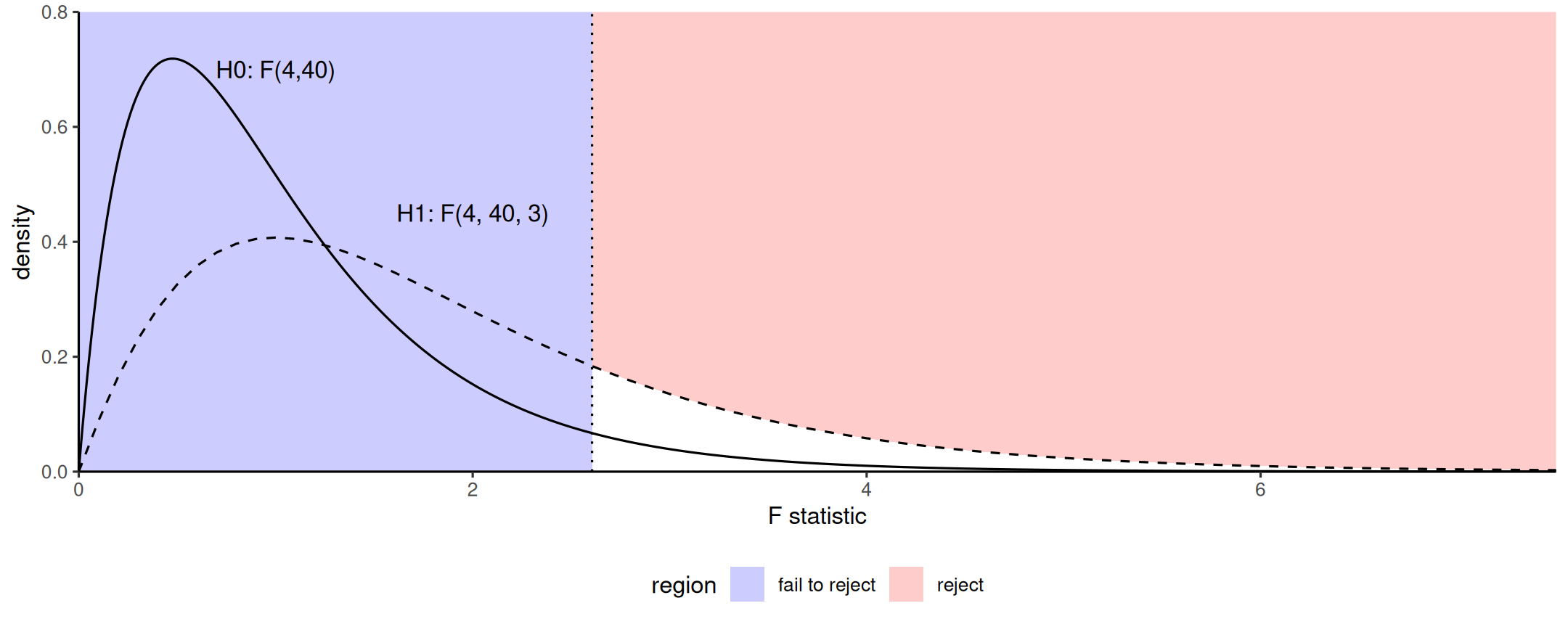

How does the F-test behave under an alternative?

Thinking about power

What do you think is the effect on power of an increase of the

- group sample size $n_1, \ldots, n_K$.

- variability $\sigma^2$.

- true mean difference $\mu_j - \mu$.

What happens under the alternative?

The peak of the distribution shifts to the right.

Why? On average, the numerator of the F-statistic is

$$\mathsf{E}(\text{between-group variability}) = \sigma^2 + \frac{\sum_{j=1}^K n_j(\mu_j-\mu)^2}{K-1}.$$

Under the null hypothesis, $\mu_j=\mu$ for $j=1,\ldots,K$

- the rightmost term is 0.
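
The expectation formula can be checked by simulation. The following sketch assumes a balanced design with normal errors and made-up group means. It compares the Monte Carlo average of the between-group mean square (the numerator of the F statistic) with the theoretical value.

```r
# Monte Carlo check of the expected between-group mean square (illustrative values).
set.seed(80667)
K <- 3
nj <- 10
sigma <- 1
mu <- c(0, 0.5, 1)                     # hypothetical group means
group <- factor(rep(seq_len(K), each = nj))
ms_between <- replicate(5000, {
  y <- rnorm(K * nj, mean = rep(mu, each = nj), sd = sigma)
  ybar <- tapply(y, group, mean)
  nj * sum((ybar - mean(y))^2) / (K - 1)   # numerator of the F statistic
})
# Theoretical value: sigma^2 plus the weighted squared deviations over K - 1
expected <- sigma^2 + sum(nj * (mu - mean(mu))^2) / (K - 1)
c(simulated = mean(ms_between), theoretical = expected)
```

Setting all entries of `mu` equal brings both numbers back to roughly the error variance, which is the null case.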

Noncentrality parameter and power

The alternative distribution is a noncentral $F(\nu_1,\nu_2,\Delta)$ distribution with degrees of freedom $\nu_1$ and $\nu_2$ and noncentrality parameter

$$\Delta = \frac{\sum_{j=1}^K n_j(\mu_j-\mu)^2}{\sigma^2}.$$

I cried power!

The null hypothesis corresponds to a single value (equality in mean), whereas there are infinitely many alternatives...

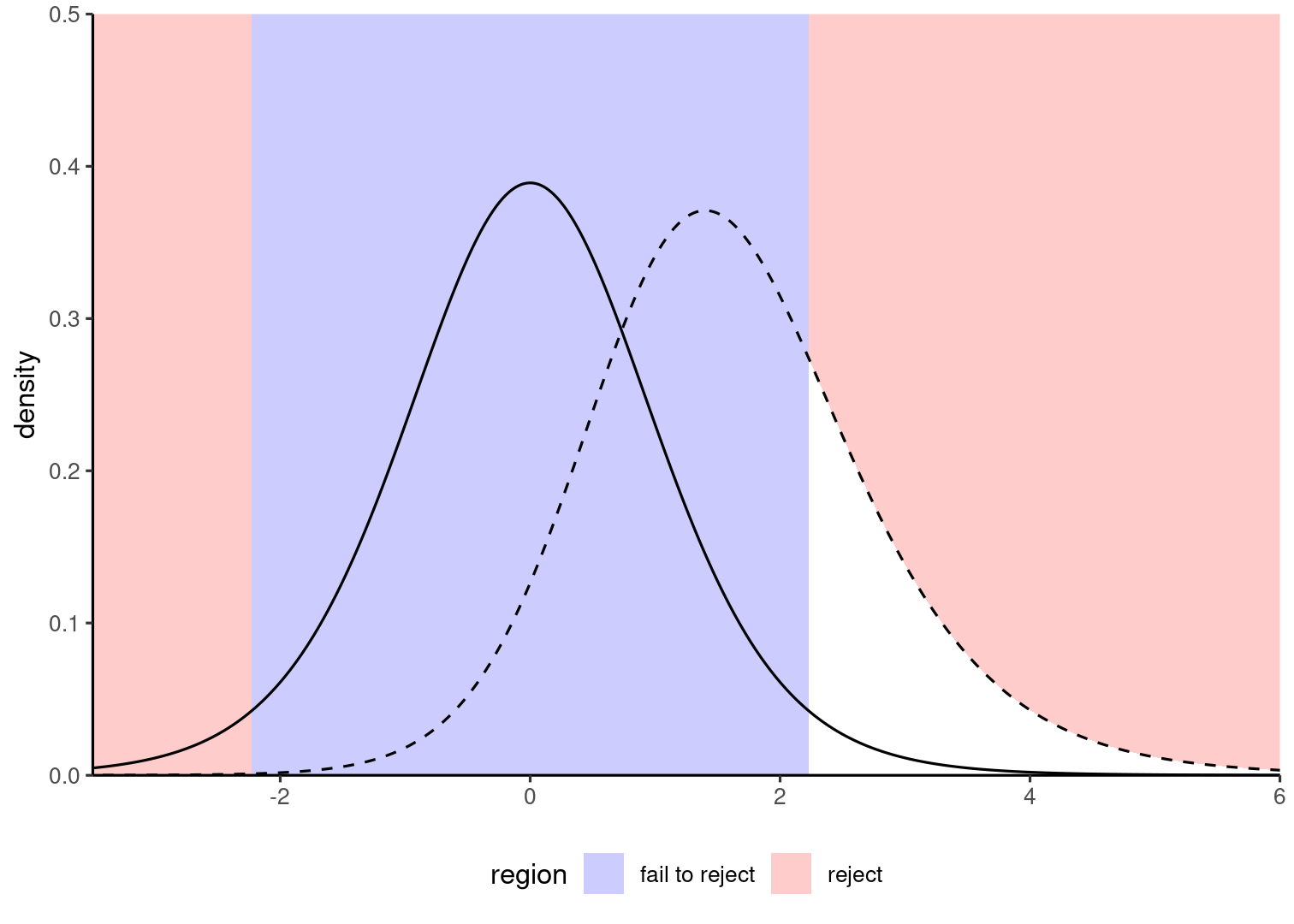

Power is the ability to detect when the null is false, for a given alternative (dashed).

Power is the area in white under the dashed curve, beyond the cutoff.

In which of the two figures is power the largest?

What determines power?

Think of potential factors that impact power for a factorial design.

- The size of the effects, $\delta_1 = \mu_1-\mu$, …, $\delta_K = \mu_K-\mu$

- The background noise (intrinsic variability, $\sigma^2$)

- The level of the test, $\alpha$

- The sample size in each group, $n_j$

- The choice of experimental design

- The choice of test statistic

We focus on the interplay between

effect size | power | sample size

The level is fixed, but we may consider multiplicity correction within the power function. The noise level is oftentimes intrinsic to the measurement.

Living in an alternative world

In a one-way ANOVA, the alternative distribution of the F test has an additional parameter $\Delta$, which depends on both the sample and the effect sizes.

$$\Delta = \frac{\sum_{j=1}^K n_j(\mu_j-\mu)^2}{\sigma^2} = nf^2.$$

Under the null hypothesis, $\mu_j=\mu$ for $j=1,\ldots,K$ and $\Delta=0$.

The greater $\Delta$, the further the mode (peak of the distribution) is from unity.
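
The identity linking the noncentrality parameter to Cohen's $f$ follows directly from the definition of $f^2$ given earlier, writing $n = n_1 + \cdots + n_K$ for the overall sample size:

$$
n f^2 = n \cdot \frac{1}{\sigma^2}\sum_{j=1}^K \frac{n_j}{n}(\mu_j-\mu)^2 = \frac{\sum_{j=1}^K n_j(\mu_j-\mu)^2}{\sigma^2} = \Delta.
$$

For a fixed effect size $f$, the noncentrality parameter therefore grows linearly with the overall sample size $n$.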

When does power increase?

What is the effect of an increase of the

- group sample size $n_1, \ldots, n_K$.

- variability $\sigma^2$.

- true mean difference $\mu_j - \mu$.

Noncentrality parameter

The alternative distribution is a noncentral $F(\nu_1,\nu_2,\Delta)$ distribution with degrees of freedom $\nu_1$ and $\nu_2$ and noncentrality parameter $\Delta$.

For other tests, parameters vary but the story is the same.

The plot shows the null and alternative distributions. The noncentral F is shifted to the right (mode = peak) and right skewed. The power is shaded in blue, the null distribution is shown in dashed lines.
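
The shaded area can be computed directly with the noncentral F distribution functions of base R. In this minimal sketch, the helper `anova_power` and all numerical inputs are hypothetical. It evaluates the power for a baseline scenario and then varies the sample size, the variability and the mean differences one at a time.

```r
# Power of the one-way ANOVA F test as a tail area of the noncentral F distribution.
# `anova_power` is a hypothetical helper; inputs are illustrative, not from a real study.
anova_power <- function(mu, sigma, nj, alpha = 0.05) {
  K <- length(mu)
  n <- sum(nj)
  mu_bar <- sum(nj * mu) / n                      # overall (weighted) mean
  Delta <- sum(nj * (mu - mu_bar)^2) / sigma^2    # noncentrality parameter
  cutoff <- qf(1 - alpha, df1 = K - 1, df2 = n - K)
  # Probability of exceeding the cutoff under the alternative
  pf(cutoff, df1 = K - 1, df2 = n - K, ncp = Delta, lower.tail = FALSE)
}

mu <- c(0, 0.25, 0.5)
power_scenarios <- c(
  baseline     = anova_power(mu, sigma = 1, nj = rep(20, 3)),
  larger_n     = anova_power(mu, sigma = 1, nj = rep(40, 3)),
  larger_sigma = anova_power(mu, sigma = 2, nj = rep(20, 3)),
  larger_diff  = anova_power(2 * mu, sigma = 1, nj = rep(20, 3))
)
round(power_scenarios, 3)
```

Each scenario changes the power only through the noncentrality parameter and, for the sample size, through the residual degrees of freedom.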

Power for factorial experiments

- G⋆Power and R packages take Cohen's $f$ (or $f^2$) as inputs.

- Calculation based on the F distribution with

  - $\nu_1 = \mathrm{df}_{\text{effect}}$ degrees of freedom

  - $\nu_2 = n - n_g$, where $n_g$ is the number of mean parameters estimated.

  - noncentrality parameter $\phi = nf^2_{\langle\text{effect}\rangle}$
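
The recipe in the list above can be written as a short base R function. This is a sketch under the stated assumptions, with a hypothetical helper name and made-up inputs. It is not the implementation used by G⋆Power or by any R package.

```r
# Power for a main effect or interaction in a factorial design, following the recipe above.
# `factorial_power` is a hypothetical helper, not the WebPower or G*Power implementation.
factorial_power <- function(f, n, df_effect, ng, alpha = 0.05) {
  nu2 <- n - ng                       # residual degrees of freedom
  cutoff <- qf(1 - alpha, df1 = df_effect, df2 = nu2)
  # Tail probability under the noncentral F with noncentrality n * f^2
  pf(cutoff, df1 = df_effect, df2 = nu2, ncp = n * f^2, lower.tail = FALSE)
}

# Hypothetical 2 x 3 design: the interaction has (2-1)*(3-1) = 2 degrees of freedom
# and there are 2 * 3 = 6 cell means
factorial_power(f = 0.25, n = 120, df_effect = 2, ng = 6)
```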

Example

Consider a completely randomized design with two crossed factors A and B.

We are interested in the interaction, $\eta^2_{\langle AB\rangle}$, and we want 80% power:

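```r
# Estimate Cohen's f from the partial omega-squared estimate of the interaction
# (omega.sq.part must be computed beforehand, e.g., with effectsize::omega_squared)
fhat <- sqrt(omega.sq.part / (1 - omega.sq.part))
# na and nb are the numbers of levels of factors A and B
WebPower::wp.kanova(power = 0.8, f = fhat, ndf = (na - 1) * (nb - 1), ng = na * nb)
```

Power curves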

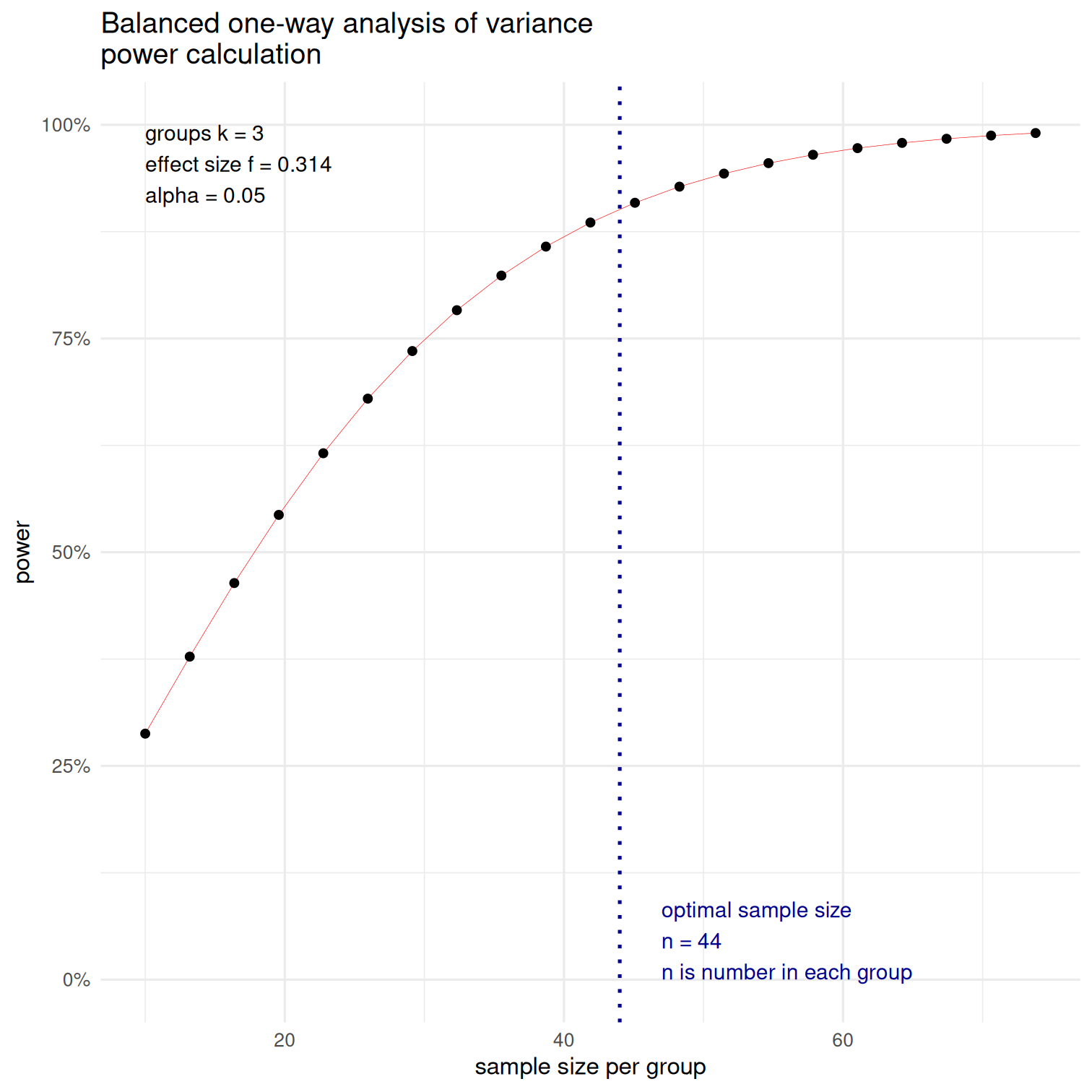

```r
library(pwr)
power_curve <- pwr.anova.test(
  f = 0.314, # from R-squared
  k = 3,
  power = 0.9,
  sig.level = 0.05)
plot(power_curve)
```

Recall: convert $\eta^2$ to Cohen's $f$ (the effect size reported in pwr) via $f^2=\eta^2/(1-\eta^2)$

Using $\tilde{f}$ instead (from $\widehat{\omega}^2$) yields $n=59$ observations per group!
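
The sample size calculation can also be sketched without any package, by increasing the per-group sample size until the target power is reached. The helper `n_per_group` below is hypothetical. It uses the noncentral F distribution for a balanced one-way ANOVA and is not the algorithm used internally by pwr, although it is meant to answer the same question.

```r
# Smallest per-group sample size reaching a target power in a balanced one-way ANOVA.
# Base R sketch (hypothetical helper), not the algorithm used internally by pwr.
n_per_group <- function(f, k, power = 0.9, alpha = 0.05, n_max = 10000) {
  for (n in 2:n_max) {
    nu2 <- k * (n - 1)                 # residual degrees of freedom
    cutoff <- qf(1 - alpha, df1 = k - 1, df2 = nu2)
    # Noncentrality parameter: overall sample size (k * n) times f^2
    achieved <- pf(cutoff, df1 = k - 1, df2 = nu2, ncp = k * n * f^2,
                   lower.tail = FALSE)
    if (achieved >= power) return(n)
  }
  NA_integer_   # target not reached within n_max
}

c(from_R2 = n_per_group(f = 0.314, k = 3),
  from_omega2 = n_per_group(f = 0.27, k = 3))
```

The smaller effect size estimate requires noticeably more observations per group for the same power, which is why the choice of estimator matters.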

Effect size estimates

WARNING!

Most effects reported in the literature are severely inflated.

Publication bias & the file drawer problem

- Estimates reported in meta-analyses, etc. are not reliable.

- Run a pilot study, provide educated guesses.

- Estimated effect sizes are uncertain (report confidence intervals).

Recall the file drawer problem: most studies with small effects lead to non-significant results and are not published. So the reported effects are, on average, larger than the true effects.

Beware of small samples

Better to do a large replication than multiple small studies.

Otherwise, you risk being in this situation:

Observed (post-hoc) power

Sometimes, the estimated values of the effect size, etc. are used as plug-in.

- Estimated effect sizes in studies are noisy!

- Post-hoc power estimates are also noisy and typically overoptimistic.

- Not recommended, but useful pointer if the observed difference seems important (large), but there isn't enough evidence (too low signal-to-noise).
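
A small simulation gives a sense of how noisy observed power is. The sketch below assumes a balanced one-way design with made-up means and normal errors. It repeatedly generates data, plugs the estimated effect size (here obtained from the coefficient of determination, so the squared estimate of f is F × ν1/ν2) into the power formula, and summarizes the spread of the resulting post-hoc power values.

```r
# Simulation: how variable is observed (post-hoc) power across replications of one design?
# All design values are hypothetical and chosen for illustration.
set.seed(2023)
K <- 3
nj <- 20
n <- K * nj
mu <- c(0, 0.25, 0.5)
sigma <- 1
nu1 <- K - 1
nu2 <- n - K
cutoff <- qf(0.95, nu1, nu2)
power_at <- function(ncp) pf(cutoff, nu1, nu2, ncp = ncp, lower.tail = FALSE)
# Power at the true noncentrality parameter
true_power <- power_at(sum(nj * (mu - mean(mu))^2) / sigma^2)
group <- factor(rep(seq_len(K), each = nj))
observed_power <- replicate(1000, {
  y <- rnorm(n, mean = rep(mu, each = nj), sd = sigma)
  Fstat <- anova(lm(y ~ group))[1, "F value"]
  power_at(n * Fstat * nu1 / nu2)      # plug in f-hat^2 = F * nu1 / nu2
})
c(true_power = true_power, quantile(observed_power, c(0.1, 0.5, 0.9)))
```

The gap between the lower and upper quantiles shows how much a single post-hoc power value can vary from one replication to the next.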

Statistical fallacy

Rejecting the null doesn't mean the alternative is true!

Power is a long-term frequency property: in a given experiment, we either reject or we don't.

Post-hoc power is not recommended unless the observed differences among the means seem important in practice but are not statistically significant.