---

title: "Linear mediation model"

type: docs

editor_options:

chunk_output_type: console

---

Download the [**R** code](/example/linearmediation.R) and the [SPSS code](/example/linearmediation.sps).

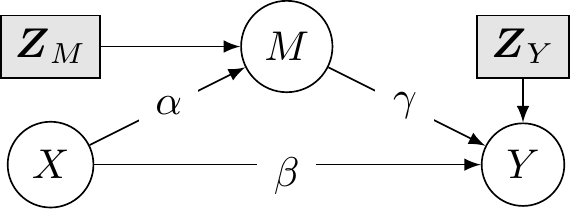

Consider linear regression models in a system where some response variable $Y$ depends on a mediator, $M$, and an experimental factor, $X \in \{0,1\}$.

The mediator model has expected value

$$

M = \underset{\text{intercept}}{c_M} + \alpha X + \underset{\text{control variates}}{g_M(\boldsymbol{Z})} + \underset{\text{error term}}{\varepsilon_M}

$$ {#eq-mediator-model}

while we have for the response

$$

Y = \underset{\text{intercept}}{c_Y} + \underset{\text{direct effect}}{\beta X} + \gamma M + \underset{\text{control variates}}{g_Y(\boldsymbol{Z})} + \underset{\text{error term}}{\varepsilon_Y}, \label{eq-responsemodel}

$$ {#eq-response-model}

The explanatory variables $\boldsymbol{Z}$ are control covariates to account for confounders, and need not be the same in both models (but could be), depending on the functional form $g_M(\cdot)$ and $g_Y(\cdot)$. The error terms $\varepsilon_Y$ and $\varepsilon_M$ are assumed to be **independent** mean-zero errors terms, each with constant variance.

```{tikz}

#| echo: false

#| cache: false

#| label: fig-dag-linearmed

#| fig-cap: "Directed acyclic graph for the linear mediation model."

#| fig-align: 'center'

#| fig-ext: "png"

#| fig-dpi: 300

#| out-width: '60%'

\usetikzlibrary{positioning}

\begin{tikzpicture}[every node/.append style={minimum size=0.5cm}]

\node [draw,circle] (X) at (-2,0) {$X$};

\node [draw,circle] (Y) at (2,0) {$Y$};

\node [draw,circle] (M) at (0,1) {$M$};

\node [draw,rectangle,fill=black!10] (Zy) at (2,1) {$\boldsymbol{Z}$};

\draw [-latex] (X) edge (Y);

\draw [-latex] (M) edge (Y);

\draw [-latex] (X) edge (M);

\node [fill=white,line width=0pt] (beta) at (0,-0.1) {$\beta$};

\node [fill=white,line width=0pt](gamma) at (1,0.5) {$\gamma$};

\node [fill=white,line width=0pt](beta) at (-1,0.5) {$\alpha$};

\draw [-latex] (Zy) edge (Y);

\draw [-latex] (Zy) edge (M);

\end{tikzpicture}

```

In the linear mediation model defined above and represented in @fig-dag-linearmed, the total effect of $X$ on $Y$ is $\beta + \alpha \gamma$ (the average treatment effect, looking at the difference in response between treatment $X=1$ and control $X=0$).

The average causal mediation effect (ACME) or natural indirect effect for treatment at level $x$ is obtained by changing the potential outcome $Y_i\{X, M_i(X)\}$ of individual $i$ for the mediator $M_i(X)$ while fixing the treatment level $X$ to $x$, so

\begin{align*}

\mathsf{ACME} = \mathsf{E}[Y_i\{x, M_i(1)\} - Y_i\{x, M_i(0)\}].

\end{align*}

In the linear mediation model, we get subtituting the value of $M$ from equation @eq-mediator-model into @eq-response-model

$$\begin{align*}

\mathsf{E}[Y_i\{x, M_i(1)\}] &= \underset{\text{intercept}}{c_Y + \gamma c_M} + \underset{\text{ACME}}{\alpha\gamma} + \beta x+ \underset{\text{effect of control variates}}{\gamma g_M(\boldsymbol{Z}) + g_Y(\boldsymbol{Z})}\\

\mathsf{E}[Y_i\{x, M_i(0)\}] &=\underset{\text{intercept}}{c_Y + \gamma c_M} + \beta x +\underset{\text{effect of control variates}}{\gamma g_M(\boldsymbol{Z}) + g_Y(\boldsymbol{Z})}\\

\end{align*}

$$

so the average difference between the two equations is $\mathsf{ACME} = \alpha\gamma$.

# Example 1

We consider an example from Experiment 1 of @Bastian:2014, who experienced the effect of the effect of shared pain (through a manipulation) on bonding.

> This effect of pain remained when controlling for age (p = .048), gender (p = .052), and group size (p = .050). None of these variables were significantly correlated with experimental condition (ps > .136) or perceived bonding (ps > .925). To determine whether the marginal tendency for the pain tasks to be viewed as more threatening than the control tasks mediated the effect of pain on perceived bonding, we conducted a bootstrap analysis (Preacher & Hayes, 2008) using 5,000 resamples. The results of this analysis revealed that threat was not a significant mediator, indirect effect = −0.11, SE = 0.09, 95% CI = [−0.34, 0.03].

There are several problems with the description: while it seems

that some covariate (age, gender, group size) were added to regression models, it is unclear whether they could be confounders, whether their effect is linear and in which (if any model they are included). Stating "bootstrap analysis" is the equivalent of "running a statistical test": so vague it could mean anything, and the fact the output is random does not help with reproducibility.

The response variable $Y$ is `bonding`, the experimental factor `condition` and `threat`, the average ALES subscale about the perception of the physical task, is the postulated mediator.

We use the `mediation` package [@mediationR] for the model; the package certainly isn't needed (nor the PROCESS macros) to run the bootstrap, which we could obtain with a single for-loop. However, it has utilities, notably for checking model assumptions, that are convenient.

```{r}

#| message: false

library(mediation, quietly = TRUE)

data(BJF14_S1, package = "hecedsm")

MsX <- lm(threat ~ condition + gender + groupsize + age,

data = BJF14_S1)

YsXM <- lm(bonding ~ condition + threat + gender + groupsize + age,

data = BJF14_S1)

```

::: {#tbl-panel layout-ncol=2}

```{r}

#| eval: true

#| echo: false

#| label: tbl-responsecoefs

#| tbl-cap: "outcome model"

coefs1 <- summary(MsX) |> broom::tidy()

coefs1$term[2:4] <- c("condition [pain]", "gender [female]", "group size")

coefs2 <- summary(YsXM) |> broom::tidy()

coefs2$term[c(2,4,5)] <- c("condition [pain]", "gender [female]", "group size")

kab1 <- knitr::kable(coefs1[,1:3], digits = 2, format = "html")

kab2 <- knitr::kable(coefs2[,1:3], digits = 2, format = "html")

kab2 |> kableExtra::kable_styling()

```

```{r}

#| tbl-cap: "mediation model"

#| label: tbl-mediationcoefs

#| eval: true

#| echo: false

kab1 |> kableExtra::kable_styling()

# knitr::kables(x = list(kab2, kab1)) |>

# kableExtra::kable_styling()

```

Coefficients of the regression models

:::

Both of the `threat` and `bonding` measures are average of Likert scales. We include the controls in the regression for the response to account for potential confounding between threat level and shared bonding: it is unclear whether the authors used the control covariates or whether these make sense.

```{r}

#| eval: false

#| echo: true

#| cache: true

#| message: false

set.seed(80667)

linmed <- mediate(

model.m = MsX,

model.y = YsXM,

sims = 1000L, # number of bootstrap simulations

boot = TRUE, # use bootstrap

boot.ci.type = "perc", # type of bootstrap: percentile

mediator = "threat", # name of mediator

treat = "condition", # name of treatment

control.value = "Control", # name of control (level of 'condition')

treat.value = "Pain") # name of treatment (level of 'condition')

summary(linmed)

```

```{r}

#| label: tbl-mediation

#| eval: true

#| echo: false

#| cache: true

#| message: false

#| tbl-cap: "Linear causal mediation analysis: parameter estimates, nonparametric bootstrap 95% confidence intervals and p-values with the percentile method based on 5000 bootstrap samples."

set.seed(80667)

linmed <- mediate(

model.m = MsX,

model.y = YsXM,

sims = 1000L, # number of bootstrap simulations

boot = TRUE, # use bootstrap

boot.ci.type = "perc", # type of bootstrap: percentile

mediator = "threat", # name of mediator

treat = "condition", # name of treatment

control.value = "Control", # name of control (level of 'condition')

treat.value = "Pain") # name of treatment (level of 'condition')

x <- summary(linmed)

smat <- c(x$d1, x$d1.ci, x$d1.p)

smat <- rbind(smat, c(x$z0, x$z0.ci, x$z0.p))

smat <- rbind(smat, c(x$tau.coef, x$tau.ci, x$tau.p))

smat <- rbind(smat, c(x$n0, x$n0.ci, x$n0.p))

rownames(smat) <- c("ACME", "ADE", "total effect", "prop. mediated")

clp <- 100 * x$conf.level

colnames(smat) <- c("estimate", paste0("lower ", clp, "% CI",

sep = ""), paste0("upper ", clp, "% CI", sep = ""), "p-value")

knitr::kable(smat,

digits = 3,

booktabs = TRUE) |>

kableExtra::kable_styling()

```

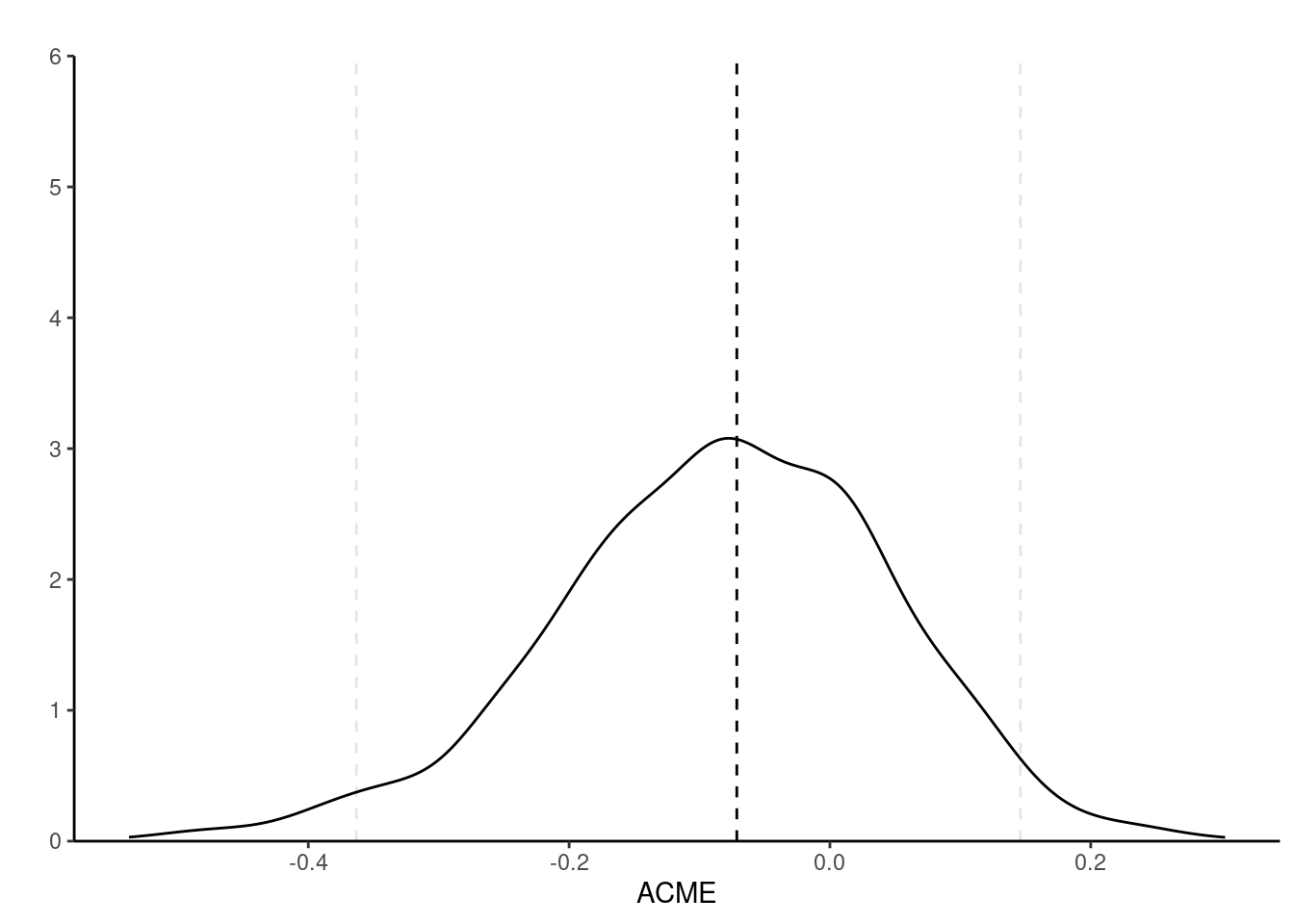

The first line of @tbl-mediation gives the average reverse natural indirect effect or average conditional mediated effect (labelled ACME), the second the average direct effect (ADE) and the third the total effect (ADE + ACME). The point estimate for ACME, $\alpha\gamma$ is $\widehat{\alpha}\widehat{\gamma} = `r round(coef(YsXM)['threat'] * coef(MsX)['conditionPain'], 2)`$.

The bootstrap sampling distribution is skewed to the left, a fact reflected by the asymmetric percentile confidence interval.

```{r}

#| eval: true

#| echo: false

#| label: fig-densityACME

#| fig-cap: "Density of the 1000 nonparametric bootstrap estimates of the average conditional mediation effect $\\alpha\\gamma$, with point estimate and 95% percentile bootstrap confidence intervals (vertical dashed lines)."

library(ggplot2)

ggplot(data = data.frame(x = linmed$d1.sims),

aes(x = x)) +

geom_vline(xintercept = linmed$d1, linetype = "dashed") +

geom_vline(xintercept = quantile(linmed$d1.sims,

c(0.025, 0.975)),

linetype = "dashed", alpha = 0.1) +

geom_density() +

scale_y_continuous(limits = c(0,6), expand = c(0,0)) +

theme_classic() +

labs(y = "", x = "ACME",

subtitle = "")

```

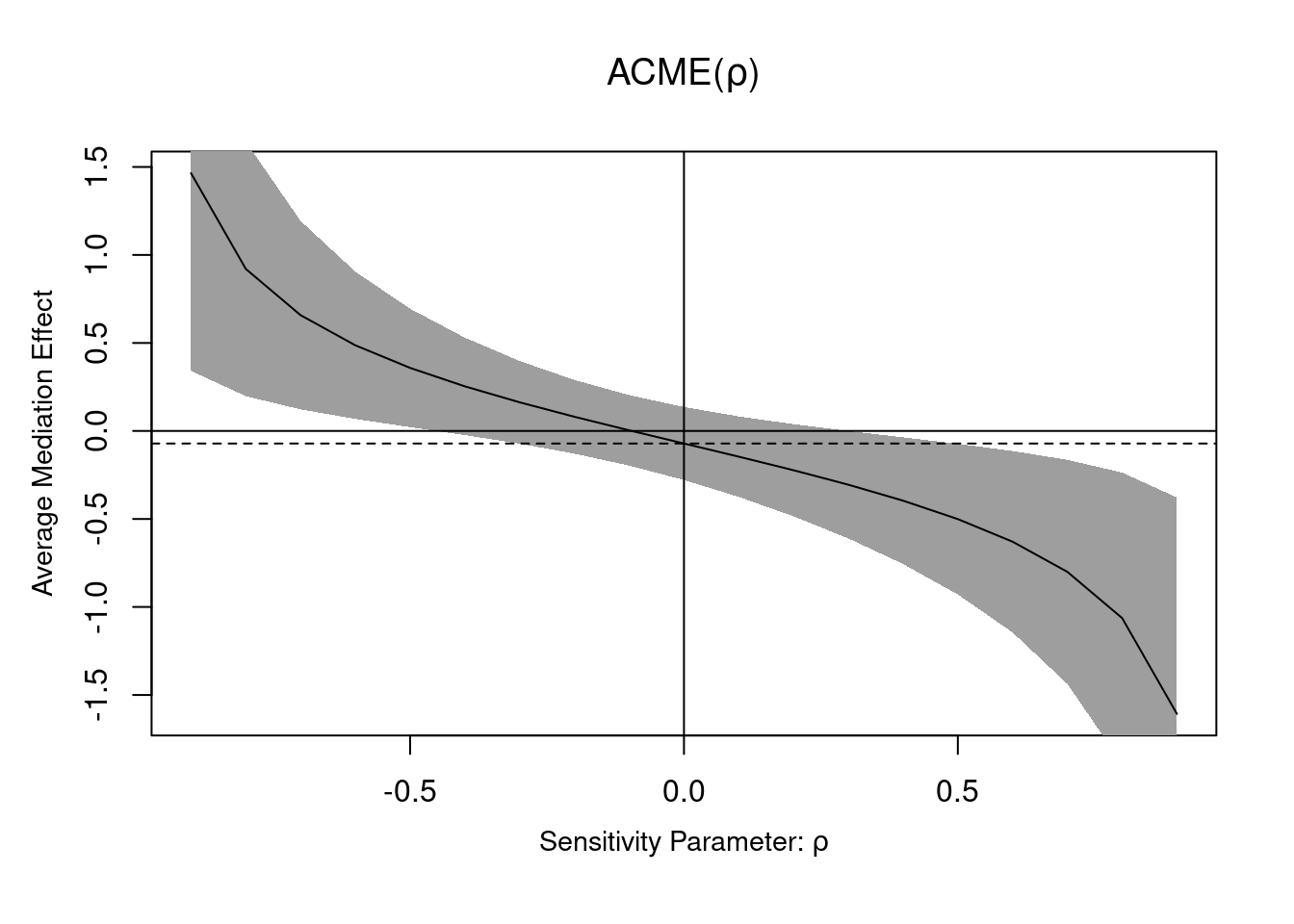

The sequential ignorability assumption cannot be verified, but we can see what impacts violations would have on the coefficients: the expected value of the coefficient $\widehat{\gamma}$ is $\gamma + \mathsf{Cov}(\varepsilon_M, \varepsilon_Y)/\mathsf{Va}(\varepsilon_M)$; the second component is a bias term that does not vanish, even when the sample size grows [@Bullock.Green.Ha:2010]. The variance of the error of the mediation and response models can be estimated, and we can vary the correlation coefficient, $\rho=\mathsf{Cor}(\varepsilon_M, \varepsilon_Y)$, to assess the sensitivity of our conclusions if there was confounding.

```{r}

#| eval: true

#| echo: true

linmed_sensitivity <- medsens(linmed)

summary(linmed_sensitivity)

plot(linmed_sensitivity)

```

The `medsens` function implements the sensitivity diagnostic presented in Section 5.1 of @Imai.Keele.Yamamoto:2010 for the linear mediation model. By default, the correlation $\rho$ varies in 0.1 increments. We can see the wide range of the ACME if there was correlation between residuals from the mediation and the response model, highlighting the wide range of values that could be returned: the ACME could go from $0.2$ to $-0.288$ for correlations in the range $\rho \in [-0.4, 0.4]$. In this example, nearly any correlation in this range would lead to "insignificant results", mostly because of the small sample size. In a situation where we had found a significant (sic) result, we could observe how much correlation btween would be needed for this effect to be an artifact of correlation and vanish.

According to the documentation of the `medsens` function (`?medsens`), there are two variants of the estimated effect size, either computing the proportion of the total (tilde, `R^2_M~R^2_Y`) or residual (starred, `R^2_M*R^2_Y*`) variance from the mediation and outcome models that are due to hypothetical unobserved confounders.